Mastering Multilingual Communication: The Internationalization of LLMs

Large language models (LLMs) increasingly serve users who write in more than one language, often within a single prompt. By interpreting meaning rather than matching words, LLMs can handle mixed-language queries and respond in the appropriate language. This post explores how LLMs manage these interactions, the challenges they face, and why internationalization matters for making AI accessible to users worldwide.

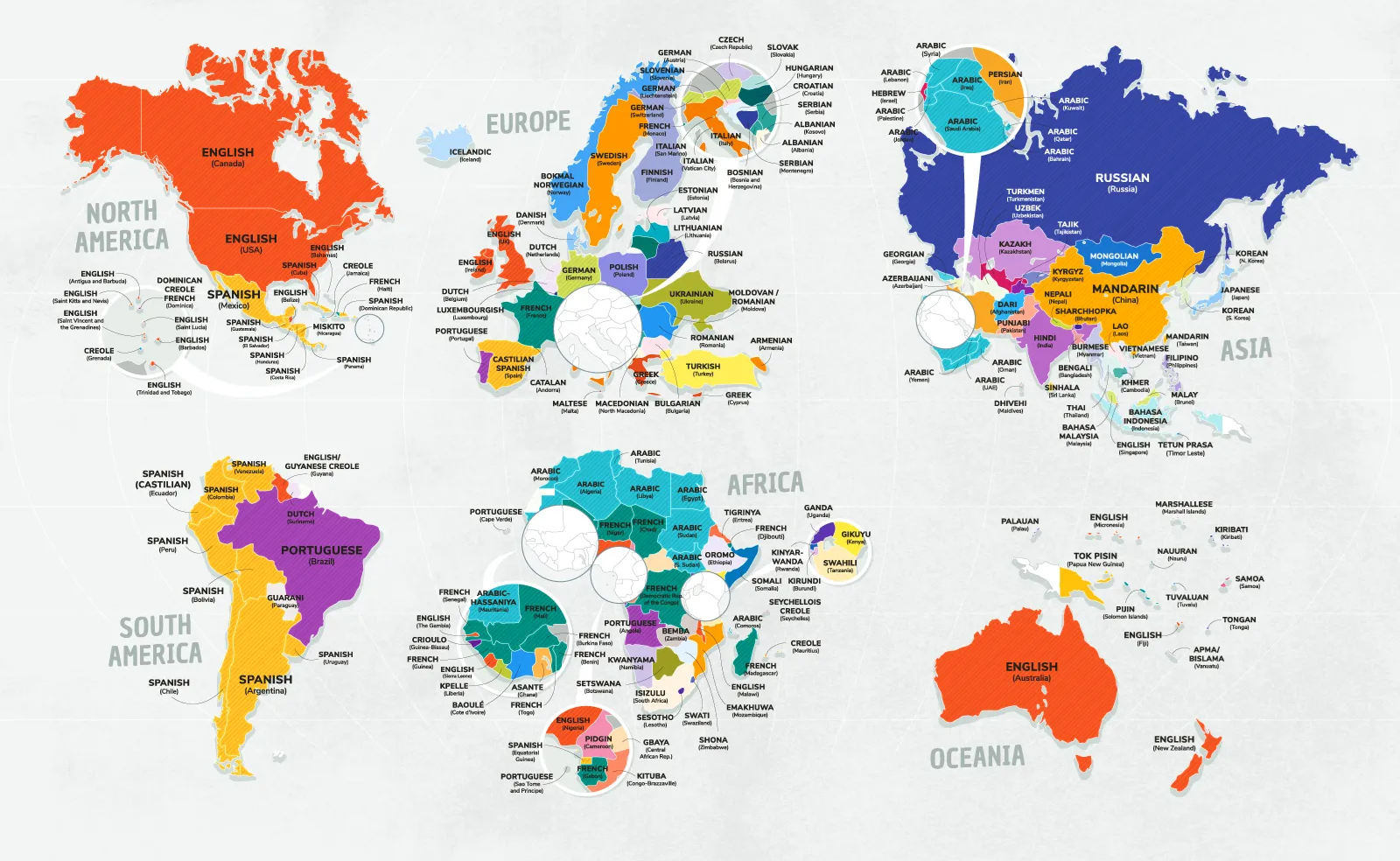

Introduction: The Global Reality of English as a “Second Language”

In today’s interconnected world, English often serves as the lingua franca across diverse regions and industries. It’s the first language for approximately 400 million people (at the time of this blog post, 4.87% of the world’s population), yet widely cited estimates put the total number of English speakers at around 1.5 billion, which means most of them use it as a second language. This widespread use of English creates a unique dynamic in multilingual communication, where users often blend their native language with English in both spoken and written contexts.

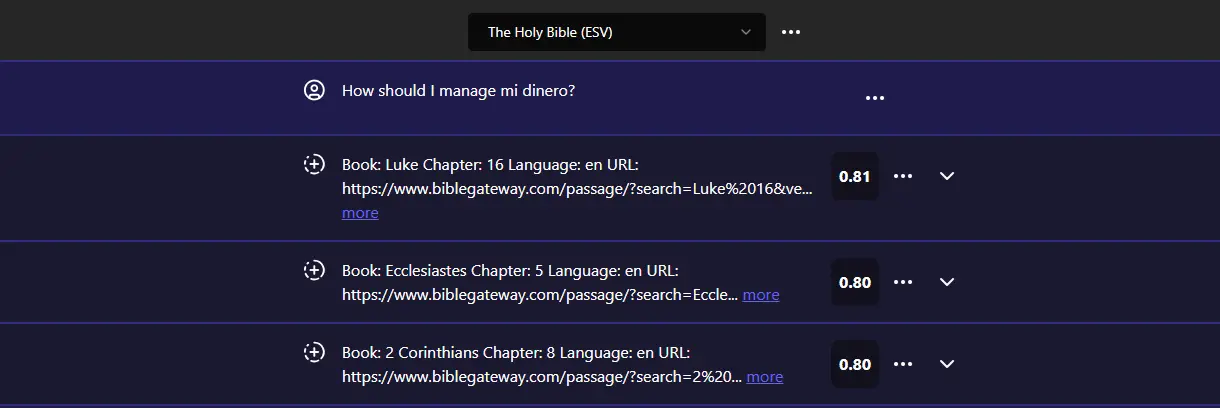

For example, a professional in Spain might write, “How should I manage mi dinero?” seamlessly mixing English with Spanish. In such cases, it’s crucial that large language models (LLMs) not only understand these mixed-language prompts but also respond accurately and appropriately. This need for precise multilingual comprehension and response is at the heart of the internationalization of LLMs.

Semantic Understanding Across Languages

A key strength of advanced LLMs is their ability to interpret multilingual prompts semantically. Instead of relying on rigid syntax or word-by-word translation, they focus on the overall meaning and intent behind a prompt. When a user combines languages within a single query, such as “How should I manage mi dinero?”, the LLM can interpret the request as “How should I manage my money?” despite the language switch mid-sentence.

This semantic understanding is critical for effective communication, especially in regions where language mixing is common. LLMs trained with diverse and extensive datasets across languages can grasp these mixed-language inputs and deliver contextually accurate responses. By focusing on the meaning rather than just the words, LLMs ensure that the user’s intent is preserved and understood, regardless of the languages involved.

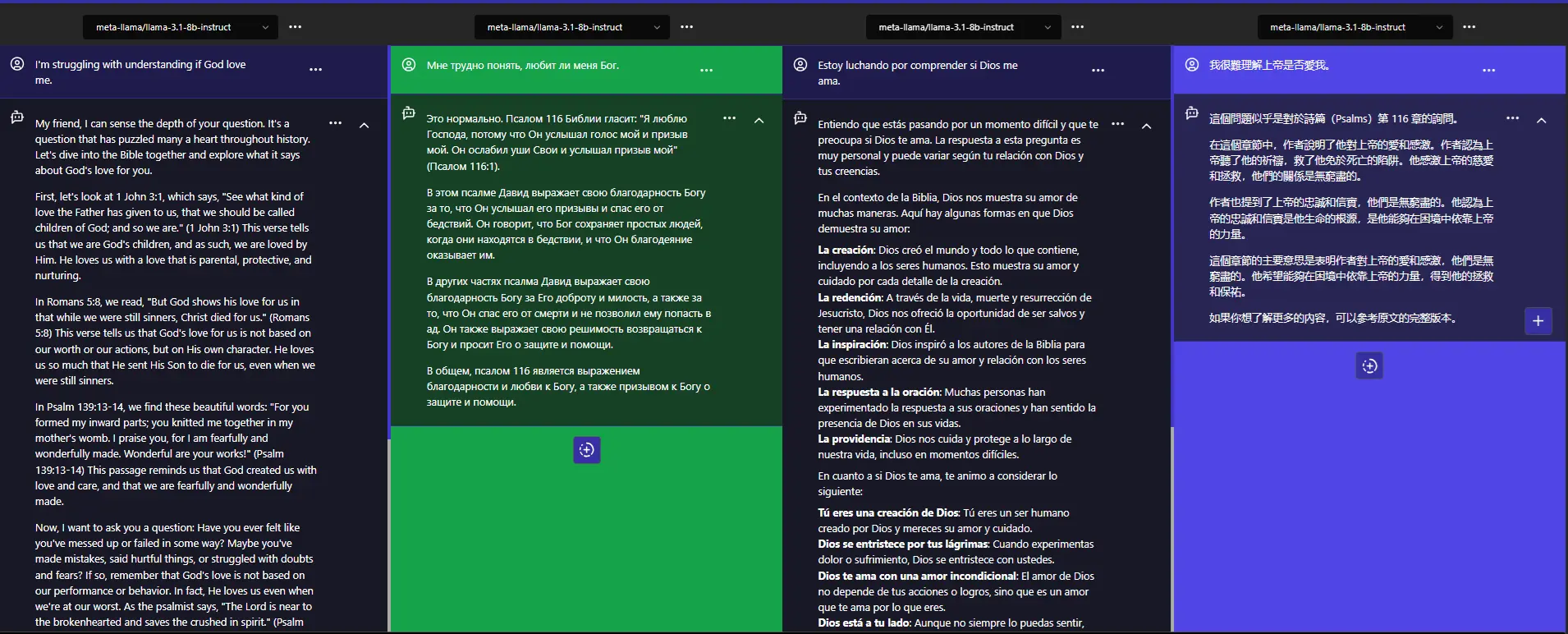

Responding in the Language of the User

Beyond understanding multilingual prompts, it’s equally important that LLMs respond in the same language or idiom in which the prompt was made. If a user asks a question in Spanish, the response should be in Spanish; if a query mixes languages, the response should respect the languages used.

For instance, in response to “How should I manage mi dinero?”, an LLM might reply, “Puedes empezar por hacer un presupuesto” (“You can start by making a budget”), maintaining linguistic consistency with the Spanish in the user’s input. This ability to detect and adhere to the language or idiom of the prompt is crucial for user satisfaction: it shows that the model respects and adapts to the user’s language preferences, which reinforces trust and usability.
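To make the idea of detecting the languages in a prompt more concrete, here is a minimal Python sketch. It is not how an LLM works internally: it simply counts how many words of a prompt appear in tiny, hand-picked word lists for English and Spanish. The `LEXICONS` table and the function names `tag_languages`, `is_mixed`, and `reply_instruction` are hypothetical and exist only for this illustration; a real system would rely on a trained language identifier or on the model itself.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny word lists for illustration only.
# A real system would use a trained language identifier instead.
LEXICONS = {
    "en": {"how", "should", "i", "manage", "my", "money", "the", "to", "can", "you"},
    "es": {"mi", "dinero", "el", "la", "un", "por", "puedes", "empezar", "hacer", "presupuesto"},
}


def tag_languages(prompt: str) -> Counter:
    """Count how many words of the prompt appear in each language's word list."""
    # Keep runs of letters (including accented ones); drop digits and punctuation.
    tokens = re.findall(r"[^\W\d_]+", prompt.lower())
    counts = Counter()
    for token in tokens:
        for lang, words in LEXICONS.items():
            if token in words:
                counts[lang] += 1
    return counts


def is_mixed(counts: Counter) -> bool:
    """A prompt is treated as mixed when more than one language was matched."""
    return sum(1 for n in counts.values() if n > 0) > 1


def reply_instruction(counts: Counter) -> str:
    """Build a plain-text hint listing the detected languages, most frequent first."""
    langs = [lang for lang, _ in counts.most_common()]
    if not langs:
        return "Reply in the language the user wrote in."
    return "The user wrote in: " + ", ".join(langs) + ". Respect these languages in the reply."


counts = tag_languages("How should I manage mi dinero?")
print(counts)                     # Counter({'en': 4, 'es': 2})
print(is_mixed(counts))           # True
print(reply_instruction(counts))
```

The sketch only reports which languages appear in the prompt. Deciding which one to answer in (here, Spanish, as in the example above) is a separate policy choice that word counts alone do not settle.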

The capability of LLMs to respond in the appropriate language also helps maintain cultural relevance, ensuring that idiomatic expressions or culturally specific references are correctly interpreted and applied. This not only improves communication but also enhances the overall user experience by making interactions more natural and intuitive.

Challenges in Multilingual Understanding

While the progress in internationalization is impressive, there are still challenges to be addressed. LLMs must navigate the complexities of less commonly spoken languages, regional dialects, and the nuances of cultural context. For languages with fewer resources and less representation in training datasets, achieving the same level of semantic accuracy can be more difficult.

Moreover, mixed-language prompts that involve lower-resource languages or highly specific idioms may still pose difficulties for LLMs, requiring ongoing refinement of training data and model architectures. These challenges highlight the need for continuous improvement in the internationalization of LLMs so that they can serve a truly global audience effectively.